Introduction

Any geographic survey needs some level of accuracy attributed to the data in use. With data acquired from an unmanned aerial system (UAS), the achievable accuracy varies with the georeferencing method, and the appropriate level depends on the intended application of the data. This project analyzes the accuracy of data sets geolocated with different methods, with volumetric applications in mind. The results pertain to the overhead cost that mining companies could expect to pay when using a UAS to conduct a volumetric analysis. That cost changes with the accuracy required, because the more accurate the data needs to be, the more an organization must spend on the technology and equipment to supply it. The method under investigation has only recently emerged in the industry as a part of the UAS arsenal: a GPS device (GeoSnap) that georeferences the images taken during a UAS mission as they are recorded. As a means of comparison, this activity will assess its accuracy relative to the more traditional methods of using ground control points (GCPs) and tie points. Ground control points call for highly accurate survey-grade GPS units to record the locations of markers that are then clearly visible in the images. During processing, these recorded points are aligned with the man-made marker in the imagery, which is what is called the ground control point. Having a large number of well-distributed ground control points allows a high degree of accuracy to be built into the data. A similar method uses tie points, which relies on a second data set of the same extent that has already been georeferenced with GCPs.
With the two data sets, the user must find natural or man-made features, determine the location of those distinct features in a specified coordinate system from the georeferenced data, and then apply those coordinates to the data that is not geolocated, aligning them in much the same way as GCPs. The last method, and the one this project is most interested in testing, is processing the data without any GCPs or tie points and relying solely on the GeoSnap onboard GPS to provide the location for the data. It is assumed that the GeoSnap will not be as accurate as the method that incorporates GCPs, but it may still be accurate enough for volumetric mining applications, which would reduce the overhead cost of collecting such data. The sections that follow discuss the accuracy of these data sets and lay out the methods that produced the results.

Study Area

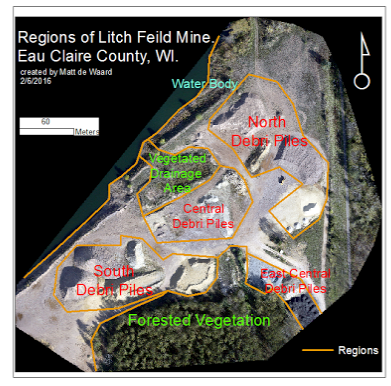

The study was conducted at the Litchfield Mine, a site operated by the Kreamer Brothers construction company. The site holds a number of piles of varying material type and size. The area containing all of the site's piles is bordered by forest, a pond, and a bike trail. Apart from the pond, the property is enclosed on all sides by a fence. Figure 1 below shows a map of the overall area of interest. The map was made using an orthomosaic captured with the DJI Phantom during the October flight mission.

Figure 1: Study Area Map

Methods

Data sets involved in analysis

This comparative survey used three flights, flown with two separate platforms on three different days. The flights were as follows:

March: DJI hexacopter carrying a Sony ILCE-6000 camera set to 12-megapixel resolution. Two projects were processed from this flight: one georeferenced with tie points, and one in which only the GeoSnap was used for geolocation.

Ideally, all of these data sets would have GCPs, so that each could be compared against the same area processed without further georeferencing. Unfortunately, on the day of the March 28th flight the Topcon survey GPS was not working. Because of this, tie points from the earlier October data set had to be used to give the March flight a more accurate georeference. The first step in preparing the data for comparison was therefore to process all of the data sets. For the October flight, the images were processed with GCPs, which were marked using the GCP/tie point manager in Pix4D. Once these GCPs were marked and all processing steps were complete, features and objects present in both the March and October data had to be found for use as tie points. This matters because the March data has no GCPs; using objects (tie points) that sit in the same location in both data sets carries the improved positional accuracy of the October data over to the March data. For comparison, another project was processed from the March data with no added tie points. The fourth and final data set in this comparison is the data collected by the Phantom platform in March. This data set has more GCPs than the Phantom data from October, and the GCPs are spread over a much broader area.

Once the October data was georeferenced and the March data had completed initial processing, the tie points could be applied. This process involved combing through both sets of imagery and identifying objects in the same location. Once these objects were found, a table of their locations was built from the UTM coordinates in the October data. That table was then imported into Pix4D and applied to the imagery from each of the March flights.
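As an illustration of the tie point table described above, here is a minimal Python sketch that writes feature locations to CSV text. The feature labels and coordinates are made-up placeholders, not values from this survey, and the column order (label, easting, northing, elevation) is an assumption that should be checked against what the processing software expects on import.

```python
import csv
import io

# Hypothetical tie points: (label, easting_m, northing_m, elevation_m).
# These coordinates are placeholders, not measurements from the study site.
tie_points = [
    ("feature_1", 620000.00, 4960000.00, 250.00),
    ("feature_2", 620150.25, 4960075.50, 251.30),
    ("feature_3", 619980.75, 4960120.10, 249.85),
]

def tie_points_to_csv(points):
    """Return the tie point table as CSV text, one row per feature."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for label, easting, northing, elevation in points:
        # Fixed three-decimal formatting keeps millimeter precision explicit.
        writer.writerow(
            [label, f"{easting:.3f}", f"{northing:.3f}", f"{elevation:.3f}"]
        )
    return buffer.getvalue()

csv_text = tie_points_to_csv(tie_points)
print(csv_text)
```

Keeping the table in a plain text format like this makes it easy to reuse the same coordinates for every project that needs them.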

Finding and Applying Check Points

To check positional accuracy between the data sets, additional features present in all of the data sets had to be identified and their positions recorded from the October data. This process began by identifying 6 features with an adequate spread around the AOI and recording their UTM locations in X, Y, and Z. These features were difficult to find, given that it had already been a struggle to find matching features to use as tie points for the March data. Nevertheless, the features were identified and applied. The locations of these points, relative to the GCPs used in the relevant data sets, are shown in the Results section (Figure 2).

Results and Discussion

The results of this study were largely in line with what was expected, in terms of the accuracy of the various data sets given the processing method used for their geolocation. Figure 2 below gives a visual representation of the offset between a check point feature and its processed location. Below Figure 2 are tables that show, for each check point feature, the residual difference relative to the October data, along with the RMS error associated with each direction (the XY plane and the Z axis).

Figure 2: Maps and visualizations of discrepancies between processed point and feature (bridge corner)

Figure 3: Figures associated with May GCP and October GCP accuracy

Figure 4: Figures associated with October GCP data and May tie point data accuracy

Figure 5: Figures associated with October GCP data and May 'no tie point or GCP' data accuracy
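For readers who want to reproduce this kind of comparison, the following is a minimal Python sketch of how check point residuals and RMS errors can be computed. The coordinates are made-up placeholders, and the way the horizontal (XY) error is combined here is an assumption; the software that produced the reported figures may define it differently.

```python
import math

def rmse(errors):
    """Root-mean-square of a list of signed errors (same units as the input)."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))

def check_point_rmse(reference, processed):
    """Compare processed check point positions against reference positions.

    Both arguments map a feature label to an (x, y, z) tuple in meters.
    Returns the RMS error in X, Y, the horizontal XY plane, and Z.
    """
    dx, dy, dz = [], [], []
    for label, (ref_x, ref_y, ref_z) in reference.items():
        x, y, z = processed[label]
        dx.append(x - ref_x)
        dy.append(y - ref_y)
        dz.append(z - ref_z)
    # Horizontal error per point is the straight-line distance in the XY plane.
    horizontal = [math.hypot(ex, ey) for ex, ey in zip(dx, dy)]
    return {"x": rmse(dx), "y": rmse(dy), "xy": rmse(horizontal), "z": rmse(dz)}

# Placeholder positions (meters), not measurements from this survey.
reference = {"a": (0.0, 0.0, 0.0), "b": (10.0, 10.0, 5.0)}
processed = {"a": (3.0, 4.0, 0.0), "b": (10.0, 10.0, 7.0)}

errors = check_point_rmse(reference, processed)
print(errors)
```

Reporting the horizontal and vertical errors separately matters for volumetric work, since the two can behave quite differently for the same data set.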

What was most surprising in this data is that there was very little difference in overall accuracy between the data that used tie points and the data that used only the GeoSnap (compare Figures 4 and 5). The only thing that made the GeoSnap data less accurate was a slightly higher discrepancy in Z values. What this perhaps illustrates is that using tie points to improve geolocation can introduce problems of its own. Possible reasons are as follows:

1. Tie points leave more room for human error during alignment in initial processing.

2. In many instances, like this one, it is difficult to find features that can be identified in both data sets across the whole extent of the AOI. Clusters of tie points next to areas that have none will cause irregularities.

3. As a user becomes more desperate for features to use as tie points, they start to use less distinct features, which makes the tie-down process less effective.

There are ways to account for this drop in accuracy. One is to employ a camera or camera setting with a higher resolution, which would let features be seen more clearly at larger scales and so let the user place tie points more precisely. Overall, though, this process is not nearly as trustworthy as using GCP markers, yet it often requires the same resource expenditure as data processed with GCPs, because at some point the reference data had to be geolocated using GCPs.

The GCP method is much more reliable at increasing the accuracy of the data because the collectors have total control over the spread and number of points they choose to use. This flexibility allows for ample spread, whereas with tie points the user is limited to the features that are distinguishable in both sets of data.

Conclusion

Summary of average RMSE results:

8 GCPs to GeoSnap: 0.656 meters

8 GCPs to tie points: 0.653 meters

8 GCPs to 15 GCPs: 0.260 meters

Based on these findings, if a company or organization is comfortable with a potential discrepancy of around 0.7 meters, a UAS with a GeoSnap or a similar onboard GPS unit will suffice as a means of cutting labor and equipment costs. However, if that accuracy is not adequate for volumetric analysis, the best option is to use data that incorporates GCPs in processing. One thing that seems almost certain is that these UAS-based GPS devices will only get stronger and more accurate, and will eventually provide ample accuracy without the need for ground control points or tie points. This will prove to be significant because not all AOIs are the same. The Litchfield Mine is, overall, very flat, with little vegetation and a lot of free space in which to place GCPs. It is unfair to assume that this will always be the case, so it is good to know that there is technology available that can provide sub-meter accuracy in three dimensions.
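The decision described above can be written as a simple tolerance check. This Python sketch uses the three average RMSE values from the summary; the method labels are shorthand introduced here for illustration, and the function name is hypothetical.

```python
# Average RMSE (meters) from the summary above, each measured against the
# October data set processed with 8 GCPs. Labels are illustrative shorthand.
AVERAGE_RMSE_M = {
    "GeoSnap only": 0.656,
    "Tie points": 0.653,
    "15 GCPs": 0.260,
}

def methods_within_tolerance(tolerance_m, rmse_by_method=AVERAGE_RMSE_M):
    """Return the methods whose average RMSE does not exceed the tolerance."""
    return [
        name for name, value in rmse_by_method.items() if value <= tolerance_m
    ]

# A project that can accept about 0.7 m of discrepancy.
print(methods_within_tolerance(0.7))
# A project that needs better than 0.5 m.
print(methods_within_tolerance(0.5))
```

With a tolerance of 0.7 meters all three methods qualify, while a tolerance of 0.5 meters leaves only the GCP-based data, which mirrors the trade-off between cost and accuracy discussed above.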