Introduction

One of the most sought-after skills in the UAS world is the ability to process collected imagery and compile it into maps that people outside the industry can easily read and understand. Practicing geographers should avoid simply posting pictures of an area at all costs; any time we do post an image, it should be tied to a map that contains a spatial reference, a north arrow, and a legend. The following activity introduces how to process data collected from a UAS, interpret patterns in it, and describe those patterns concisely. The activity follows a template format: each question provided by Dr. Hupy is posted in bold, with the answer below it in normal font.

Methods

In this section, students copy a folder containing a series of example UAS datasets into their own student folders. Each part below covers working with one type of data and creating maps from it, followed by questions to paste into the report along with an answer.

Flash Flight Logs

Flash flight logs are KMZ files that represent the different maneuvers executed during a UAV mission. The file is broken up according to the device's different behaviors, e.g., loitering vs. autopilot flight.

What components are missing that make this into a map?

In this view, we see two flight paths, each composed of several layers that correspond to what the device was doing during a given portion of its flight. To make this a map, it would need some form of scale, a north arrow for directional reference, and a legend telling viewers what they are looking at. Without these items there is no spatial context to refer to, which, in geographic terms, is something you never want to allow.

What are the advantages and disadvantages of viewing this data in Google Earth?

Viewing this data in Google Earth has both advantages and disadvantages. On the plus side, it is quick and simple, which is useful for a fast look at a flight path, especially if you are already familiar with the flight and its purpose. On the downside, Google Earth shows only the physical path and height of the flight, not the other attribute information that would be available if you opened the file in a GIS program. The essential elements of a map, such as a scale bar, north arrow, and legend, are not available either, which means you can't present this data in a professional or educational setting with any credibility.

How do you save a KMZ file as a KML file?

Right-click the 'flight path auto' layer and choose Save Place As.... Then browse to the location where you want to save the file and change the file type from KMZ to KML; these are the only two options.
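A KMZ file is a ZIP archive wrapped around a KML document (conventionally named doc.kml), so the same conversion can also be scripted. The sketch below is my own illustration using only Python's standard library, not a Google Earth feature; the function name and file paths are hypothetical.

```python
import zipfile
from pathlib import Path


def kmz_to_kml(kmz_path, kml_path):
    """Extract the main KML document from a KMZ archive and write it to kml_path.

    Prefers the conventional root name doc.kml and falls back to the first
    .kml entry in the archive. Returns the name of the entry that was used.
    """
    with zipfile.ZipFile(kmz_path) as archive:
        kml_names = [n for n in archive.namelist() if n.lower().endswith(".kml")]
        if not kml_names:
            raise ValueError(f"no .kml file found inside {kmz_path}")
        name = "doc.kml" if "doc.kml" in kml_names else kml_names[0]
        Path(kml_path).write_bytes(archive.read(name))
    return name
```

Note that any images or models bundled inside the KMZ are not copied out; for a flight path, which is just coordinates, the KML alone is enough.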

Go into ArcMap, bring in basemap imagery, and import the KML into ArcMap. How do you do this?

ArcMap has a tool called KML to Layer, which converts a KML file into a layer. I ran it with the output location set to my lab folder on the Q drive. The resulting map is shown in Figure 1 below:

Figure 1
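KML to Layer does this conversion inside ArcMap. To show what it is reading, here is a minimal sketch (my own illustration, not how the ArcMap tool is implemented) that pulls flight-path vertices out of a KML file's LineString elements with Python's standard library. It assumes the file uses the standard KML 2.2 namespace; a file exported with a different namespace would need KML_NS changed.

```python
import xml.etree.ElementTree as ET

# Assumed namespace: the OGC KML 2.2 namespace used by current Google Earth exports.
KML_NS = {"kml": "http://www.opengis.net/kml/2.2"}


def read_linestrings(kml_text):
    """Return each LineString in a KML document as a list of (lon, lat, alt) tuples."""
    root = ET.fromstring(kml_text)
    paths = []
    for coords in root.iterfind(".//kml:LineString/kml:coordinates", KML_NS):
        points = []
        # KML coordinates are whitespace-separated "lon,lat[,alt]" tuples.
        for token in coords.text.split():
            parts = [float(v) for v in token.split(",")]
            alt = parts[2] if len(parts) > 2 else 0.0
            points.append((parts[0], parts[1], alt))
        paths.append(points)
    return paths
```

Note the axis order: KML stores longitude before latitude, which is a common source of flipped points when moving between tools.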

Telemetry Logs

A telemetry log is a file created during a UAV flight when using the Mission Planner software. It contains detailed information about speed, pitch, yaw, and other components of the mission.

When Mission Planner is opened, the home interface has a set of tabs on the left side of the screen; one of them contains a tool labeled 'Tlog > Kml or Graph'. This tool can do a number of things, but here it was used to create a KML from a telemetry log. The resulting file is a KMZ that includes multi-path, multi-point, and multi-patch elements. It was then converted with the same KML to Layer tool used for the flash log, and the resulting map (Figure 2) shows the layer created from that telemetry-log-based KMZ file.

Figure 2
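Mission Planner writes this KMZ for you. As a rough illustration of what such a file contains, the sketch below builds a minimal KML LineString from telemetry position fixes. The function name and fix values are hypothetical, and reading a real .tlog (a binary MAVLink log) would require a MAVLink parser, which is not shown. It also assumes the altitudes are above sea level, which is what KML's absolute altitude mode expects.

```python
from xml.sax.saxutils import escape


def telemetry_to_kml(name, fixes):
    """Build a KML document with one LineString from (lat, lon, alt_m) fixes.

    Telemetry usually lists latitude first, but KML coordinates are written
    as longitude,latitude,altitude, so the order is swapped here.
    """
    coords = " ".join(f"{lon},{lat},{alt}" for lat, lon, alt in fixes)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<kml xmlns="http://www.opengis.net/kml/2.2"><Document>'
        f"<Placemark><name>{escape(name)}</name><LineString>"
        # 'absolute' means altitudes are interpreted relative to sea level.
        "<altitudeMode>absolute</altitudeMode>"
        f"<coordinates>{coords}</coordinates>"
        "</LineString></Placemark></Document></kml>"
    )
```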

GEMs Geotiffs

A geotiff is a raster file in which each cell is geolocated. Along with the pixel values, the file contains all the information needed to place the cells in their correct locations for different map projections and datums.
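One common way to express that placement information is the six-number affine "geotransform" used by GDAL. The sketch below shows how a cell's column and row become map coordinates under that convention; the geotransform values (5 cm pixels in a projected, UTM-like system) are hypothetical, not read from the lab files.

```python
def pixel_to_map(geotransform, col, row):
    """Map a pixel's upper-left corner (col, row) to map coordinates.

    geotransform follows the GDAL convention:
    (origin_x, pixel_width, row_rotation, origin_y, col_rotation, pixel_height),
    where pixel_height is negative for north-up images.
    """
    x0, dx, rx, y0, ry, dy = geotransform
    x = x0 + col * dx + row * rx
    y = y0 + col * ry + row * dy
    return x, y


def pixel_center(geotransform, col, row):
    """Map coordinates of a pixel's center rather than its corner."""
    return pixel_to_map(geotransform, col + 0.5, row + 0.5)


# Hypothetical north-up raster: 5 cm pixels, upper-left corner at (600000, 4960000).
gt = (600000.0, 0.05, 0.0, 4960000.0, 0.0, -0.05)
print(pixel_to_map(gt, 100, 200))
```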

Calculating the statistics for a raster file lets you then reclassify the layer to show the prevalence of different cell values. In this case, the cell values represent measurements from different portions of the electromagnetic spectrum, collected with different sensors mounted on a UAV. Figure 3, below, shows the five geotiffs together after their statistics were calculated in ArcMap.

Figure 3
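The two steps described above, computing raster statistics and then reclassifying by value ranges, can be sketched in plain Python on a small grid. This is an illustration of the idea, not ArcMap's implementation; the grid, NoData value, and break values are hypothetical, and the standard deviation here is the population form.

```python
import math


def raster_stats(cells, nodata=None):
    """Min, max, mean, and population standard deviation of the valid cells."""
    values = [v for row in cells for v in row if v != nodata]
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return {"min": min(values), "max": max(values), "mean": mean, "std": std}


def reclassify(cells, breaks, nodata=None):
    """Assign classes 1..len(breaks)+1 by comparing each cell to ascending break values."""
    out = []
    for row in cells:
        new_row = []
        for v in row:
            if v == nodata:
                new_row.append(nodata)
            else:
                # Count how many breaks the value exceeds; +1 makes classes start at 1.
                new_row.append(1 + sum(v > b for b in breaks))
        out.append(new_row)
    return out


grid = [[1, 2], [3, 4]]
print(raster_stats(grid))
print(reclassify(grid, breaks=[2, 3]))
```

In practice the break values would often be chosen from the statistics themselves, for example the mean plus or minus one standard deviation.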

Pix4d Data Products

Pix4d is software that performs advanced photogrammetric processing on imagery collected by UAVs. With it, one can create orthorectified images (orthomosaics) as well as digital surface models (DSMs).

A digital surface model is a raster dataset that models the earth's surface, including the objects on top of the terrain. Each cell is attributed with the surface height of the area it covers. An orthomosaic, in contrast, is composed of multiple images mosaicked into one image that covers a given area. The combined images are orthorectified: they are projected onto a coordinate plane and corrected using elevation (Z) values, which removes the distortion caused by scale variation. Once this process is complete, the entire image looks as if it were taken from a sensor directly above every point. This operation relies on combining multiple images that overlap one another. The DSM is built from the same overlapping images, since surface heights are computed from how features shift between overlapping photos.
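The "scale variation" that orthorectification removes follows from the standard scale relation for a vertical photo, scale = f / (H - h): ground that sits higher is closer to the camera and so appears at a larger scale. The sketch below is a small illustration with hypothetical values (a 10 mm lens and a flying height of 400 m above the datum), not parameters from the Pix4d project.

```python
def photo_scale_denominator(focal_length_mm, flying_height_m, terrain_elev_m):
    """Return N in the photo scale 1:N for terrain at a given elevation.

    scale = f / (H - h), where f is the focal length, H is the flying height
    above the datum, and h is the terrain elevation above the same datum.
    """
    f_m = focal_length_mm / 1000.0
    return (flying_height_m - terrain_elev_m) / f_m


# Hypothetical: bare ground at 300 m vs. the top of a 20 m pile at 320 m.
for ground in (300.0, 320.0):
    print(f"terrain at {ground:.0f} m -> 1:{photo_scale_denominator(10.0, 400.0, ground):,.0f}")
```

Within a single photo, the top of the pile is therefore drawn at a noticeably larger scale than the ground around it, which is the distortion the DSM-based correction removes.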

Go into the Properties for each DSM and record the descriptive statistics for each. What are those statistics? Why use them?

These calculated statistics give the minimum, maximum, mean, and standard deviation of all the cells that make up the DSM raster. This information is useful for comparison, for example when comparing the same area at different times. Linking changes in these values to where they occur on the image would then give a good indication of how the area's landscape is changing. Is the entire area losing topsoil to erosion (lower mean)? Is there a pile of sand that keeps growing (higher max)? Having these values for two points in time lets you reach conclusions about what changes are occurring in a specific landscape.
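Summary statistics say that something changed; subtracting one DSM from the other cell by cell (often called a DEM of difference) also says where. A minimal sketch, assuming two DSMs of the same site that are already aligned to the same grid (same extent and cell size); the grids and function names are hypothetical.

```python
def dsm_difference(earlier, later, nodata=None):
    """Cell-by-cell elevation change (later minus earlier) for two aligned DSM grids."""
    diff = []
    for row_a, row_b in zip(earlier, later):
        # A cell is NoData in the result if it is NoData in either input.
        diff.append([nodata if nodata in (a, b) else b - a for a, b in zip(row_a, row_b)])
    return diff


def summarize_change(diff, nodata=None):
    """Mean change plus the largest loss and the largest gain among valid cells."""
    values = [v for row in diff for v in row if v != nodata]
    return {
        "mean_change": sum(values) / len(values),
        "max_loss": min(values),
        "max_gain": max(values),
    }


before = [[10.0, 10.0], [12.0, 15.0]]
after = [[9.5, 10.0], [12.0, 17.0]]  # hypothetical: slight erosion top-left, pile grew bottom-right
print(summarize_change(dsm_difference(before, after)))
```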

Hillshade the DSM images. How did you do this? Delineate regions of the DSM, thinking of each region in terms of topography, relating that to the vegetation.

To apply a hillshade to a DSM, open the layer's Properties, go to the Symbology tab, and check the box next to 'Use Hillshade Effect'.

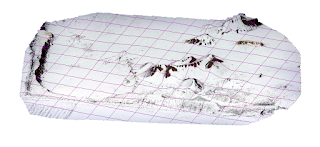
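For reference, the sketch below computes a hillshade value for one cell using the slope, aspect, and illumination formulas Esri documents for its Hillshade tool (Horn's 3x3 method, default sun azimuth 315 degrees and altitude 45 degrees). I have not confirmed that the symbology checkbox uses exactly the same calculation, so treat this as an illustration of the technique; the function name and test windows are my own.

```python
import math


def hillshade_cell(window, cellsize, azimuth_deg=315.0, altitude_deg=45.0, z_factor=1.0):
    """Hillshade value (0-255) for the center of a 3x3 elevation window.

    window is [[a, b, c], [d, e, f], [g, h, i]] with the first row to the north.
    """
    (a, b, c), (d, _e, f), (g, h, i) = window
    # Horn's finite differences: east-west and south-north rates of change.
    dzdx = ((c + 2 * f + i) - (a + 2 * d + g)) / (8 * cellsize)
    dzdy = ((g + 2 * h + i) - (a + 2 * b + c)) / (8 * cellsize)

    zenith = math.radians(90.0 - altitude_deg)
    # Compass azimuth (clockwise from north) -> math angle (counterclockwise from east).
    azimuth = math.radians((360.0 - azimuth_deg + 90.0) % 360.0)

    slope = math.atan(z_factor * math.hypot(dzdx, dzdy))
    if dzdx != 0:
        aspect = math.atan2(dzdy, -dzdx)
        if aspect < 0:
            aspect += 2 * math.pi
    elif dzdy > 0:
        aspect = math.pi / 2
    elif dzdy < 0:
        aspect = 3 * math.pi / 2
    else:
        aspect = 0.0  # flat cell: aspect does not matter because the slope is zero

    shade = 255.0 * (math.cos(zenith) * math.cos(slope)
                     + math.sin(zenith) * math.sin(slope) * math.cos(azimuth - aspect))
    return max(0.0, shade)  # cells facing away from the light are clamped to 0


rises_east = [[0, 1, 2], [0, 1, 2], [0, 1, 2]]  # slope faces west, toward a northwest sun
rises_west = [[2, 1, 0], [2, 1, 0], [2, 1, 0]]  # slope faces east, away from the sun
print(round(hillshade_cell(rises_east, 1.0)), round(hillshade_cell(rises_west, 1.0)))
```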

Open ArcScene and bring in the DSM and orthomosaic for flight 2 of Sivertson Mine. Then set the base heights to the DSM. Display the ortho in 3D, along with the DSM. Explain what this information needs to become a map. How might one do that? That is, list the criteria needed for this to become cartographically correct.

To turn this newly created 3D surface model into a map, one would need to add the essential map elements that provide the spatial context a credible map requires: a north arrow, a scale indicator, a legend, and a title. Because this is a 3D model, however, applying a scale is difficult: the surface heights vary and the view is in perspective, so there is no constant scale across the image. One option is to overlay a fishnet on the surface, where each full grid square represents the same amount of area. Squares closest to the viewpoint appear larger and squares farther away appear smaller, even though they cover the same amount of area. Figure 4 below illustrates this type of scaling.

Figure 4
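In ArcMap the grid itself can be built with the Create Fishnet tool. The sketch below shows only the underlying geometry: square cells of equal ground area tiling an extent, each returned as a closed ring of corner coordinates. The extent and cell size in the example are hypothetical.

```python
import math


def fishnet(xmin, ymin, xmax, ymax, cell_size):
    """Return square cells covering an extent, each as a closed ring of (x, y) corners.

    Every cell covers cell_size squared of ground, which is what lets the grid
    act as a scale reference even when perspective makes distant cells look small.
    """
    ncols = math.ceil((xmax - xmin) / cell_size)
    nrows = math.ceil((ymax - ymin) / cell_size)
    cells = []
    for r in range(nrows):
        for c in range(ncols):
            x0 = xmin + c * cell_size
            y0 = ymin + r * cell_size
            x1, y1 = x0 + cell_size, y0 + cell_size
            cells.append([(x0, y0), (x1, y0), (x1, y1), (x0, y1), (x0, y0)])
    return cells


grid = fishnet(0, 0, 100, 50, 10)  # hypothetical 100 m x 50 m site, 10 m cells
print(len(grid), grid[0])
```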

Results

T-Logs: Referring to Figure 1, above, the pattern of the flight log's 'auto-pilot' path has the characteristics of a multirotor. For instance, the turns in the flight path are very sharp and square: the device makes long north/south runs, turns at a sharp right angle to the east, travels roughly 30 meters, and then resumes its north/south path. In total, the flight made four north/south runs; at the end of the fourth, the device stopped, turned around, and flew directly back to its starting point. If this were the flight path of a fixed-wing aircraft, the turns would be much more rounded and would likely take 60 until the aircraft was heading in the opposite direction. The 30 meter gap between the north/south runs indicates that this flight was flown at a relatively high altitude. At a higher altitude each image covers more ground, so the runs must be spaced farther apart to avoid unnecessary overlap between images; a flight closer to the ground would use narrower spacing between its runs.

Geo-Tiffs: How does the RGB image differ from the base map imagery? What is the difference in zoom levels? How does this relate to GSD?

The RGB geotiff from the GEMproducts file has much richer, more contrasting tones and colors than the ArcMap basemap imagery. If you zoom in on the border where the geotiff ends and only the basemap imagery remains, you can see that the geotiff retains a much higher level of detail at close zoom levels. In terms of ground sampling distance (GSD), each geotiff pixel covers a smaller patch of ground, so the geotiff has a smaller GSD; smaller objects can be distinguished, and you could use a smaller object as a scale reference for the image.
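The same camera geometry links altitude, GSD, and flight-line spacing, which also underlies the reasoning about the 30 meter gap between the auto-pilot runs. The sketch below uses the standard nadir, flat-ground relations (footprint = altitude x sensor width / focal length; GSD = footprint / image width in pixels). The camera values (13.2 mm sensor width, 8.8 mm focal length, 5472 px wide images) and the 80% side overlap are hypothetical and say nothing about the altitude of the actual flights.

```python
def footprint_width_m(altitude_m, sensor_width_mm, focal_length_mm):
    """Ground width covered by one nadir image over flat ground."""
    return altitude_m * sensor_width_mm / focal_length_mm


def gsd_cm_per_px(altitude_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Ground sampling distance: centimetres of ground per image pixel."""
    return footprint_width_m(altitude_m, sensor_width_mm, focal_length_mm) * 100.0 / image_width_px


def line_spacing_m(altitude_m, sensor_width_mm, focal_length_mm, sidelap):
    """Distance between adjacent flight lines for a side overlap given as a fraction (0-1)."""
    return footprint_width_m(altitude_m, sensor_width_mm, focal_length_mm) * (1.0 - sidelap)


# Hypothetical camera, used only to show the proportions.
CAMERA = dict(sensor_width_mm=13.2, focal_length_mm=8.8)

for altitude in (50, 100):
    gsd = gsd_cm_per_px(altitude, image_width_px=5472, **CAMERA)
    spacing = line_spacing_m(altitude, sidelap=0.8, **CAMERA)
    print(f"{altitude} m: GSD {gsd:.2f} cm/px, line spacing {spacing:.1f} m")
```

Both GSD and line spacing scale linearly with altitude: doubling the height doubles the ground each pixel covers and doubles how far apart the runs can be.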

What discrepancies do you see in the mosaic? Do the images match seamlessly? Are the colors 'true'? Where do you see the most distortion?

Within the geotiff, it's hard to see detail in portions of the image where the surface is bright or light-colored. The image shows the surface of the AOI in true color, in that it shows the RGB colors of the ground surface. There are also a few places where overlapping images differ slightly in brightness and don't flow seamlessly into the next frame.

Compare the RGB image to the NDVI mosaics. Explain the color scheme of each NDVI mosaic by relating it to the RGB image. Discuss the patterns on the image. Explain what an NDVI is and how this relates.

NDVI = (near infrared - red)/(near infrared + red)

NDVI stands for normalized difference vegetation index. The index ranges from -1 to 1: negative values typically indicate water, and values approaching 1 indicate healthy, lush vegetation. The color scheme used to symbolize an NDVI is up to the user, who can classify it depending on which values they want to highlight. In the file NDVI-FC1, the color scheme ranges from bright red to dark blue. Compared with the RGB image, the red areas are the heavily shadowed parts of the RGB image, and the darkest blue areas are the roads. Reclassifying the mono NDVI shows that the areas with the highest NDVI values are the shadowed areas, and the lowest values are the road and scattered spots throughout the vegetation. This pattern is consistent across each mosaic.

The shadows show high NDVI values because little or no visible light is reflected from those areas, while some near-infrared is still present. Conversely, on surfaces like the road, where values are very low, the concrete is very light and reflects large amounts of both visible (RGB) light and near-infrared. The healthy vegetation in the garden shows up as areas with high near-infrared reflectance, a characteristic of healthy plants, which absorb most visible light and reflect a large amount of near-infrared.
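Because NDVI is a ratio, a pixel can score high even when it reflects very little light overall, which is one way to read the shadow effect described above. The sketch below computes NDVI for a few pixels; the reflectance values are hypothetical, chosen only to mimic vegetation, a bright road surface, and a dark shadow. Returning None when both bands are zero is my own choice for marking NoData.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel: (NIR - Red) / (NIR + Red).

    Returns None when both bands are zero, since the ratio is undefined there.
    """
    total = nir + red
    if total == 0:
        return None
    return (nir - red) / total


# Hypothetical reflectances (0-1) for three kinds of pixels.
samples = {
    "vegetation": (0.50, 0.05),  # strong NIR, little red
    "road": (0.30, 0.25),        # bright in both bands
    "shadow": (0.04, 0.005),     # dark overall, but NIR still dominates
}
for label, (nir, red) in samples.items():
    print(f"{label}: NDVI = {ndvi(nir, red):.2f}")
```

The shadow pixel reflects roughly a tenth as much near-infrared as the vegetation pixel, yet its NDVI comes out nearly as high.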

Orthomosaic/DSM: What is the difference between an orthomosaic and a georeferenced mosaic?

An orthomosaic is a series of images that have been combined into one image and orthorectified to remove overhead distortion. As a result, each pixel is precisely geolocated in X, Y, and Z. This is done by mosaicking the images at overlapping points and combining that mosaic with a point cloud in the form of a digital surface model, which provides elevation values as well as XY coordinates. A georeferenced mosaic is also placed in a coordinate system, but it has no DSM as part of it and thus no Z values. As a result, if you used a georeferenced mosaic to calculate the distance between two points, you would get an inaccurate measurement, because the calculation would only take two dimensions (X and Y) into account.
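A quick way to see the effect is to compare a planimetric (X, Y) distance with one that also uses the elevation difference a DSM provides. The points below are hypothetical, for example the base and top of a pile. This measures the straight-line distance between two points; a distance along the ground surface would instead sum many short segments along a profile.

```python
import math


def distance_2d(p, q):
    """Planimetric distance from X and Y only (all a mosaic without Z can support)."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def distance_3d(p, q):
    """Straight-line distance that also includes the elevation difference from a DSM."""
    return math.dist(p, q)


base = (0.0, 0.0, 0.0)     # hypothetical (x, y, z) in meters
top = (30.0, 40.0, 120.0)
print(distance_2d(base, top), distance_3d(base, top))
```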

What types of patterns do you notice on the orthomosaic and DSM? Describe the regions you created by combining differences in topography and vegetation.

The area of interest, Litch Field Mine, has a number of distinct regions based on both land cover and topography. Figure 5, below, shows a map of the distinctive regions of this site.

Figure 5

Referring to the map in Figure 5, most of the areas with higher topography are man-made piles of unknown materials. These higher areas form three distinct regions, each labeled by its relative cardinal direction. Of all the pile regions, the only one that seems to contain a different material from the rest is the east-central region, which has a pile of black material, while the other piles are mainly sandy-colored material. Along the entire northwest edge of the image frame there is a body of water, and at the opposite edge, to the southeast, there is a forested area. Next to the water body, in the center of the frame, there appears to be a drainage area toward which any liquid remaining on the surface would flow, ultimately ending up in the water body at the edge of the frame. In that small drainage area, a low level of vegetation is growing.
